library(easystats)

library(tidyverse)

library(rstanarm) # optional!

library(ggpubr) # data visualization4 Modelling and regression

4.1 R packages needed for this chapter

4.2 What’s modelling?

Read this great introduction by modelling by Russel Poldrack. Actually, the whole book is nice Poldrack (2022).

An epitome of modelling is this, let’s call it the fundamental modelling equation, a bit grandiose but at the point, see Equation 4.1.

The data can be separated in the model’s prediction and the rest (the “error”), i.e., what’s unaccounted for by the model.

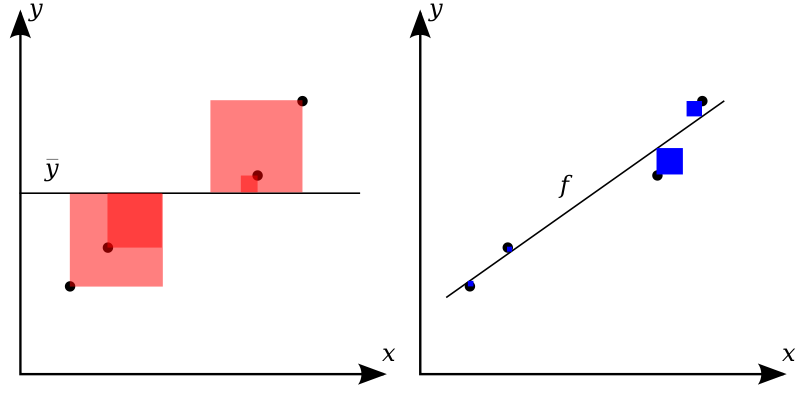

A more visual account of our basic modelling equation is depicted in Figure 4.1.

4.3 Regression as the umbrella tool for modelling

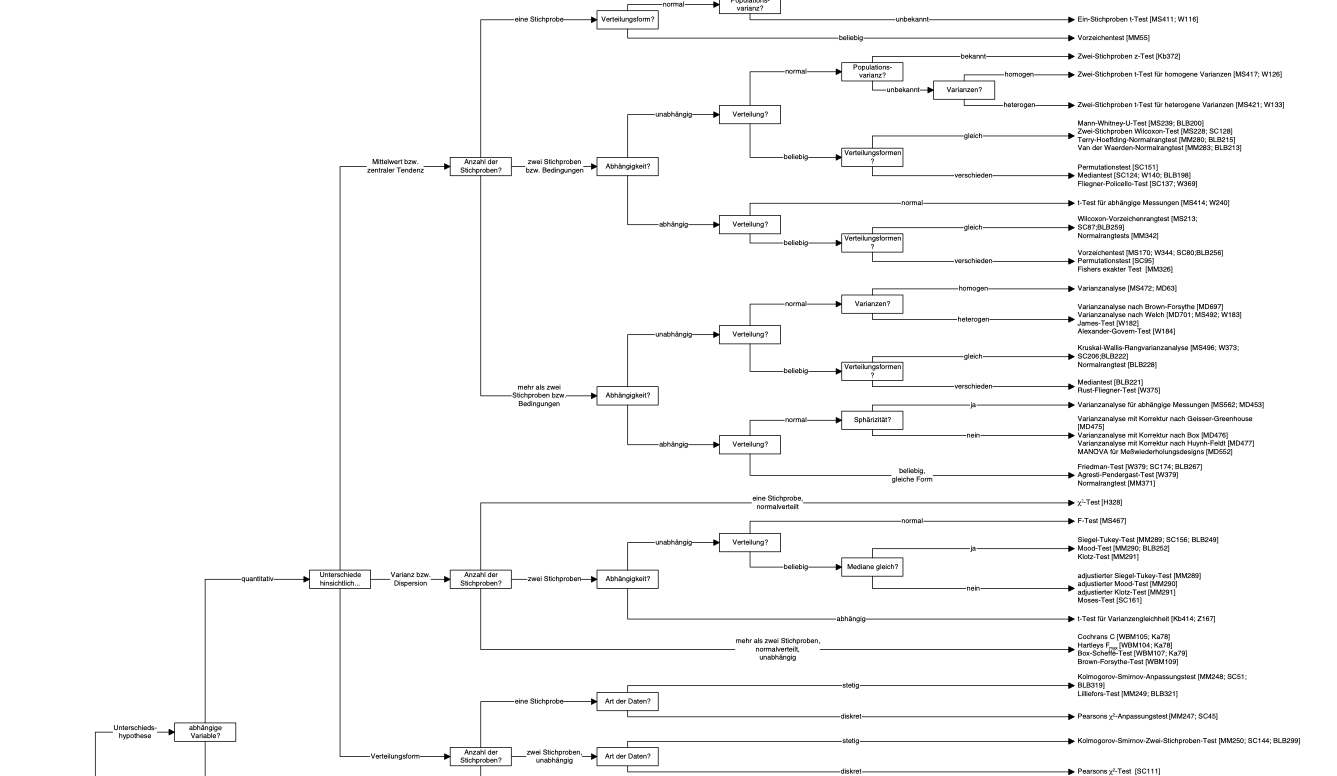

Alternatively, venture into the forest of statistical tests as outlined e.g. here, at Uni Muenster. Proceed at your own peril.

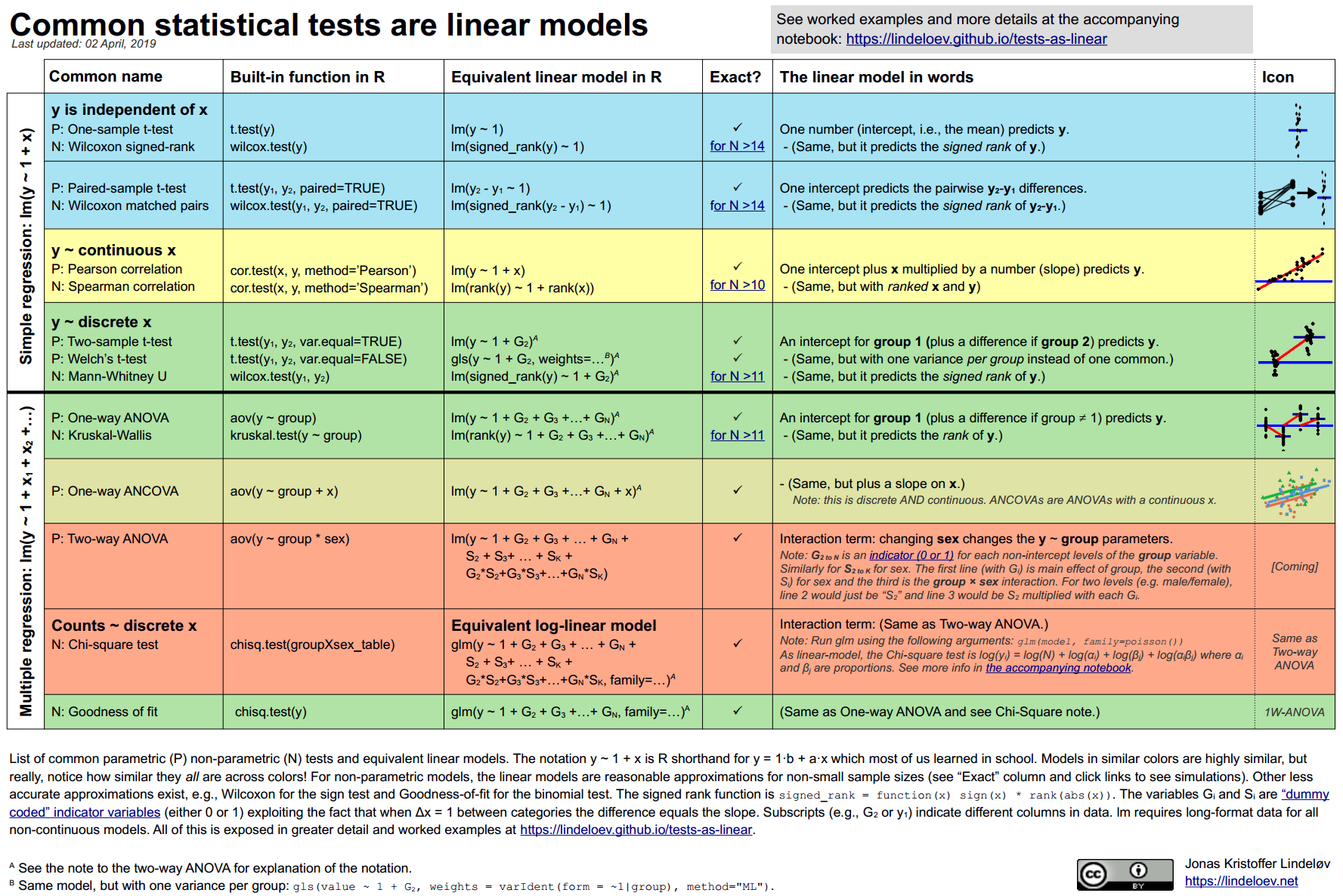

You may want to ponder on this image of a decision tree of which test to choose, see Figure Figure 4.2.

4.3.1 Common statistical tests are linear models

As Jonas Kristoffer Lindeløv tells us, we can formulate most statistical tests as a linear model, ie., a regression.

4.3.2 How to find the regression line

In the simplest case, regression analyses can be interpreted geometrically as a line in a 2D coordinate system, see Figure Figure 4.3.

Source: Orzetoo, CC-SA, Wikimedia

Put simple, we are looking for the line which is in the “middle of the points”. More precisely, we place the line such that the squared distances from the line to the points is minimal, see Figre Figure 4.3.

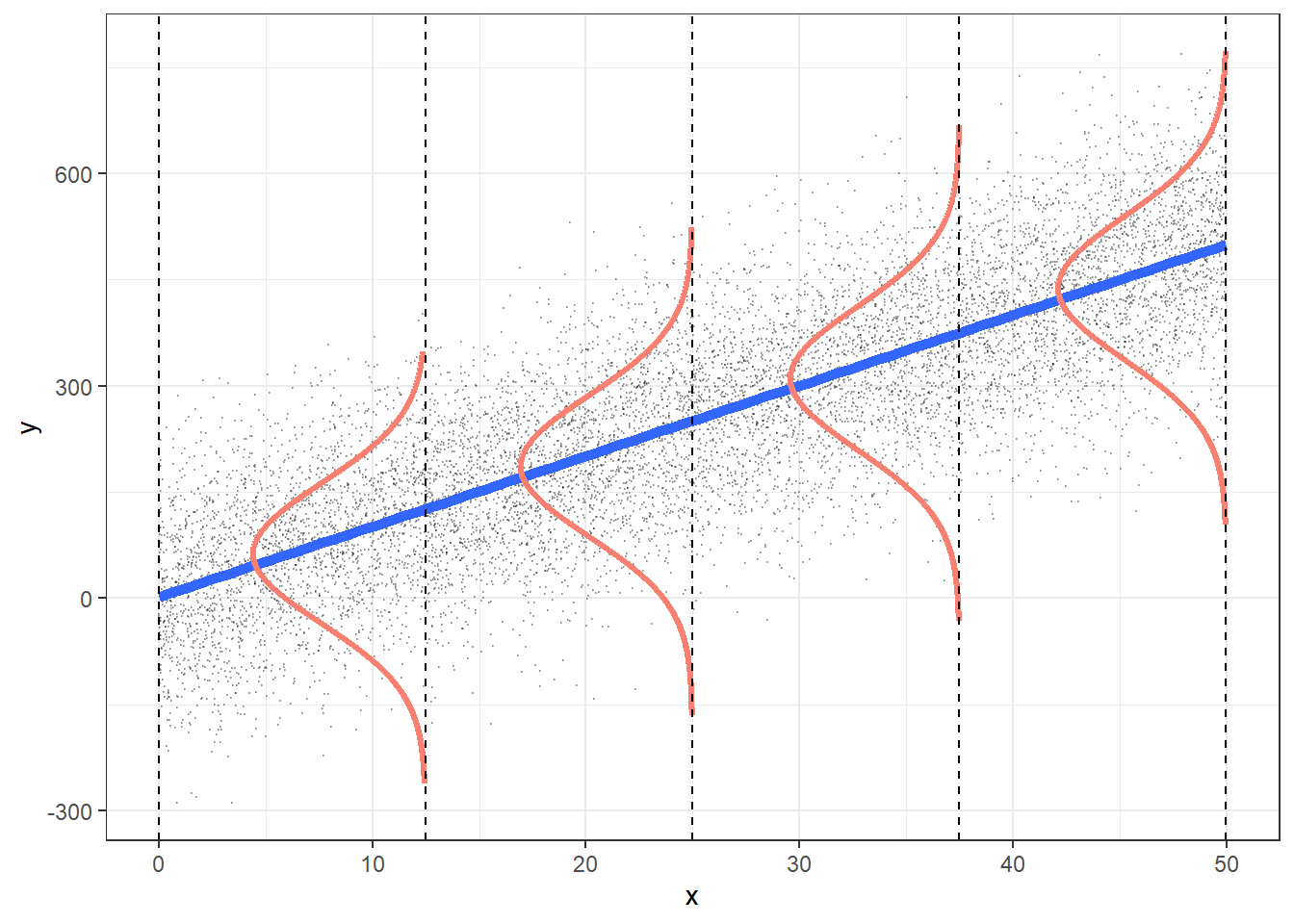

Consider Figure Figure 4.4, from this source by Roback & Legler (2021). It visualizes not only the notorious regression line, but also sheds light on regression assumptions, particularly on the error distribution.

Among the assumptions of the linear model are:

- linearity of the function

- variance of

- normality of residuals

4.3.3 The linear model

Here’s the canonical form of the linear model.

Consider a model with

4.3.4 Algebraic derivation

For the mathematical inclined, check out this derivation of the simple case regression model. Note that the article is written in German, but your browser can effortlessly translate into English. Here’s a similar English article from StackExchange.

4.4 In all its glory

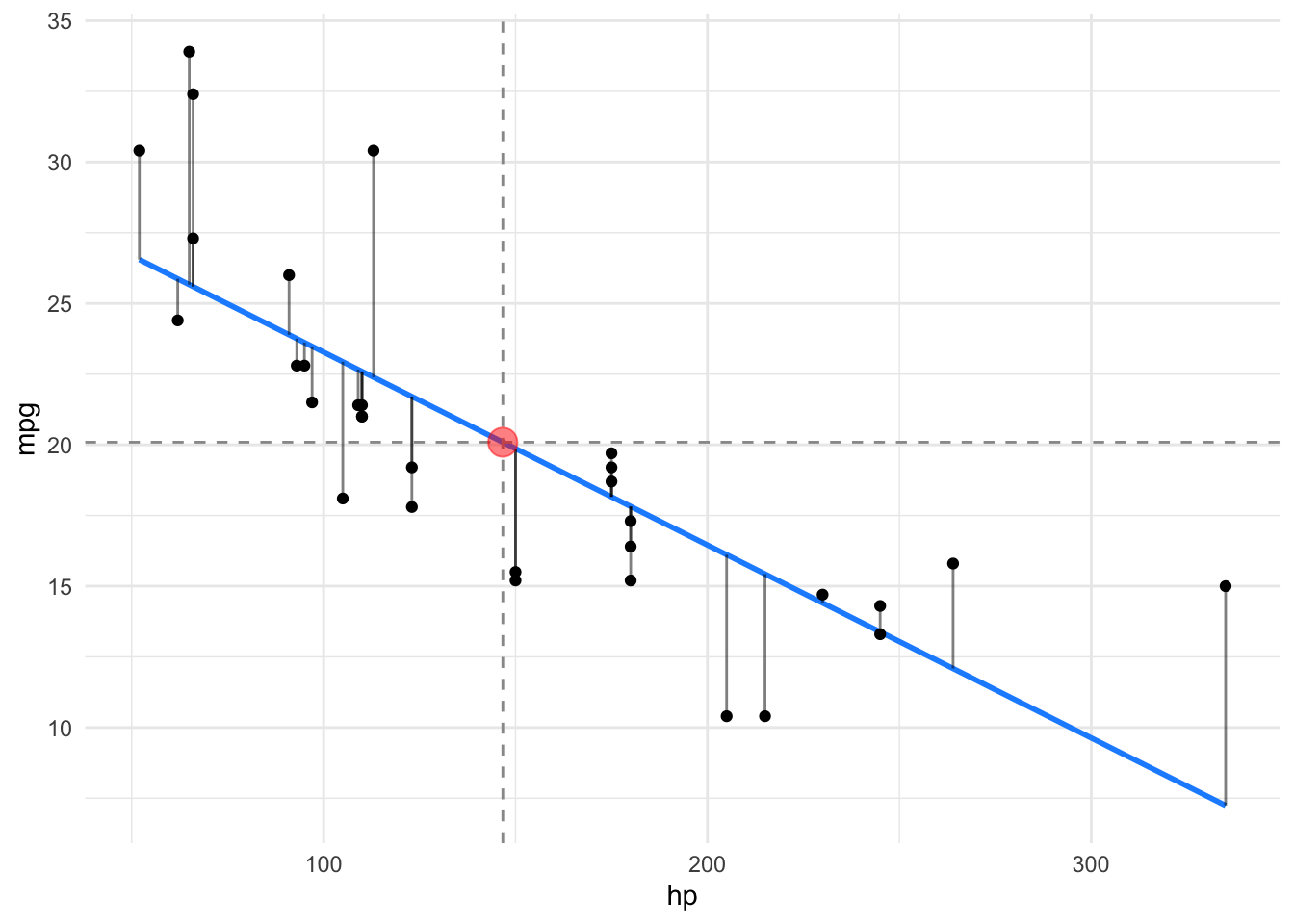

Let’s depict the residuals, s. Figure 4.5.

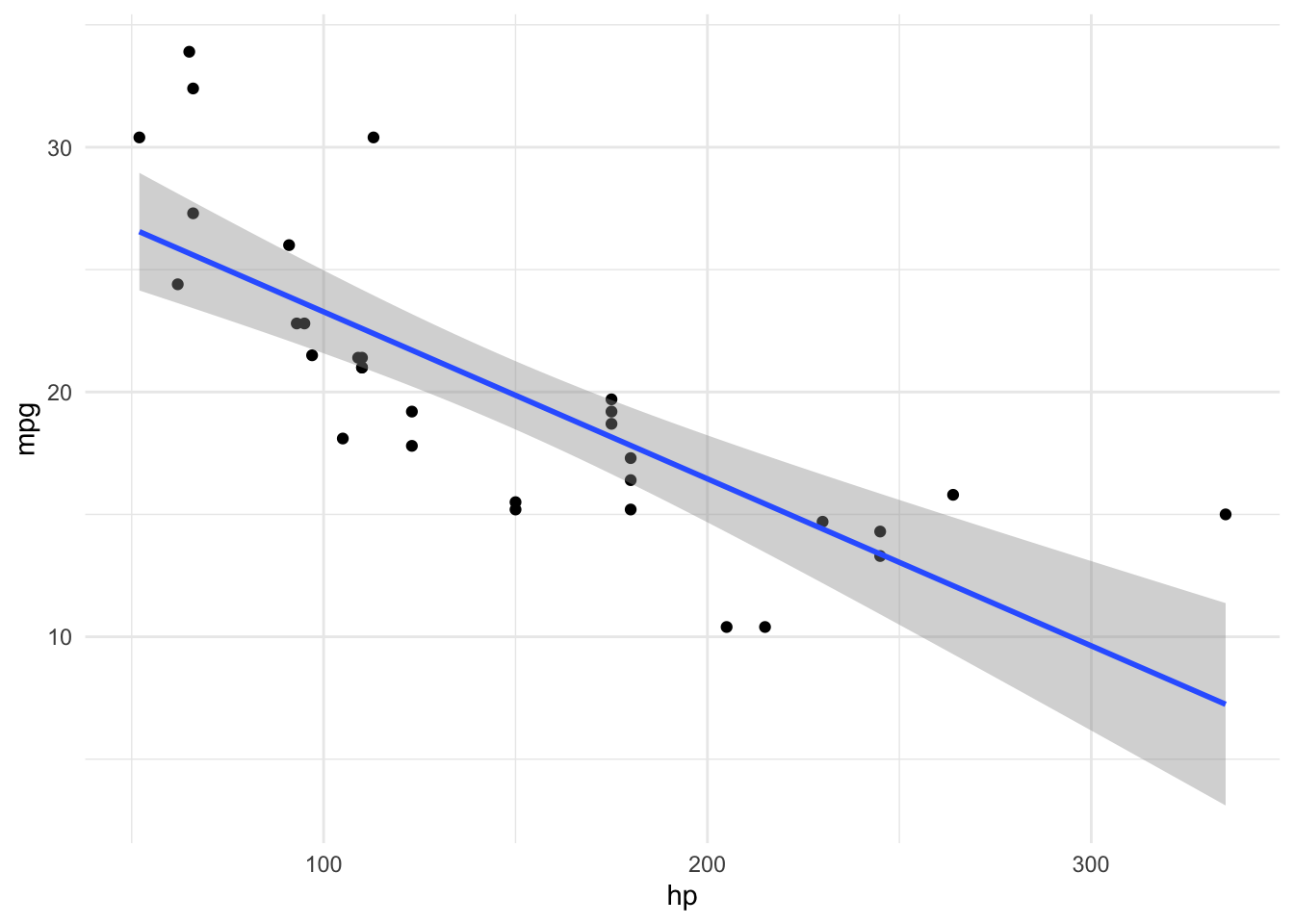

4.5 First model: one metric predictor

All the R-code for each chapter can be found as pure, R-only files here.

First, let’s load some data:

data(mtcars)

glimpse(mtcars)Rows: 32

Columns: 11

$ mpg <dbl> 21.0, 21.0, 22.8, 21.4, 18.7, 18.1, 14.3, 24.4, 22.8, 19.2, 17.8,…

$ cyl <dbl> 6, 6, 4, 6, 8, 6, 8, 4, 4, 6, 6, 8, 8, 8, 8, 8, 8, 4, 4, 4, 4, 8,…

$ disp <dbl> 160.0, 160.0, 108.0, 258.0, 360.0, 225.0, 360.0, 146.7, 140.8, 16…

$ hp <dbl> 110, 110, 93, 110, 175, 105, 245, 62, 95, 123, 123, 180, 180, 180…

$ drat <dbl> 3.90, 3.90, 3.85, 3.08, 3.15, 2.76, 3.21, 3.69, 3.92, 3.92, 3.92,…

$ wt <dbl> 2.620, 2.875, 2.320, 3.215, 3.440, 3.460, 3.570, 3.190, 3.150, 3.…

$ qsec <dbl> 16.46, 17.02, 18.61, 19.44, 17.02, 20.22, 15.84, 20.00, 22.90, 18…

$ vs <dbl> 0, 0, 1, 1, 0, 1, 0, 1, 1, 1, 1, 0, 0, 0, 0, 0, 0, 1, 1, 1, 1, 0,…

$ am <dbl> 1, 1, 1, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1, 1, 1, 0, 0,…

$ gear <dbl> 4, 4, 4, 3, 3, 3, 3, 4, 4, 4, 4, 3, 3, 3, 3, 3, 3, 4, 4, 4, 3, 3,…

$ carb <dbl> 4, 4, 1, 1, 2, 1, 4, 2, 2, 4, 4, 3, 3, 3, 4, 4, 4, 1, 2, 1, 1, 2,…4.5.1 Frequentist

Define and fit the model:

lm1_freq <- lm(mpg ~ hp, data = mtcars)Get the parameter values:

parameters(lm1_freq)| Parameter | Coefficient | SE | CI | CI_low | CI_high | t | df_error | p |

|---|---|---|---|---|---|---|---|---|

| (Intercept) | 30.0988605 | 1.6339210 | 0.95 | 26.7619488 | 33.4357723 | 18.421246 | 30 | 0e+00 |

| hp | -0.0682283 | 0.0101193 | 0.95 | -0.0888947 | -0.0475619 | -6.742388 | 30 | 2e-07 |

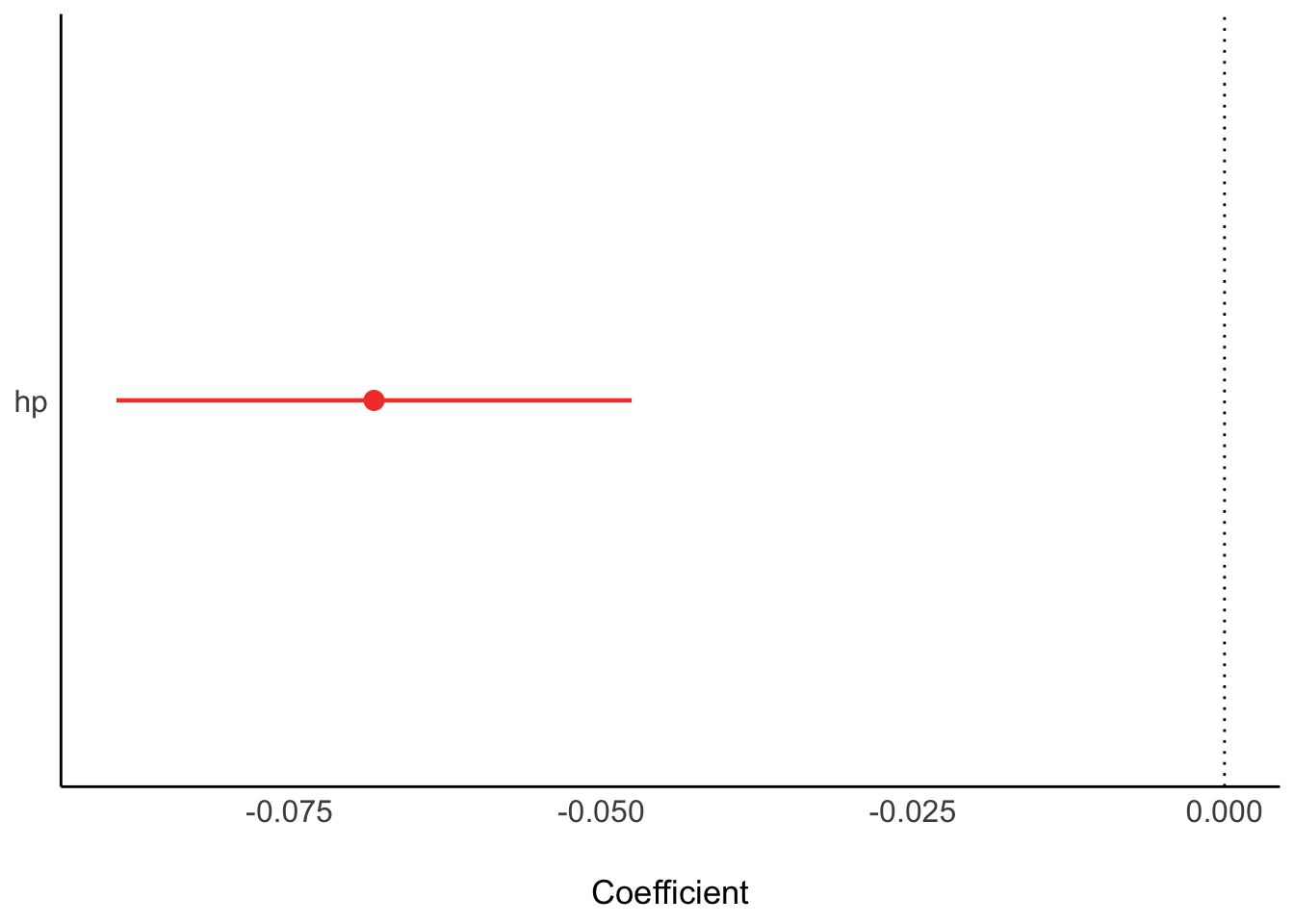

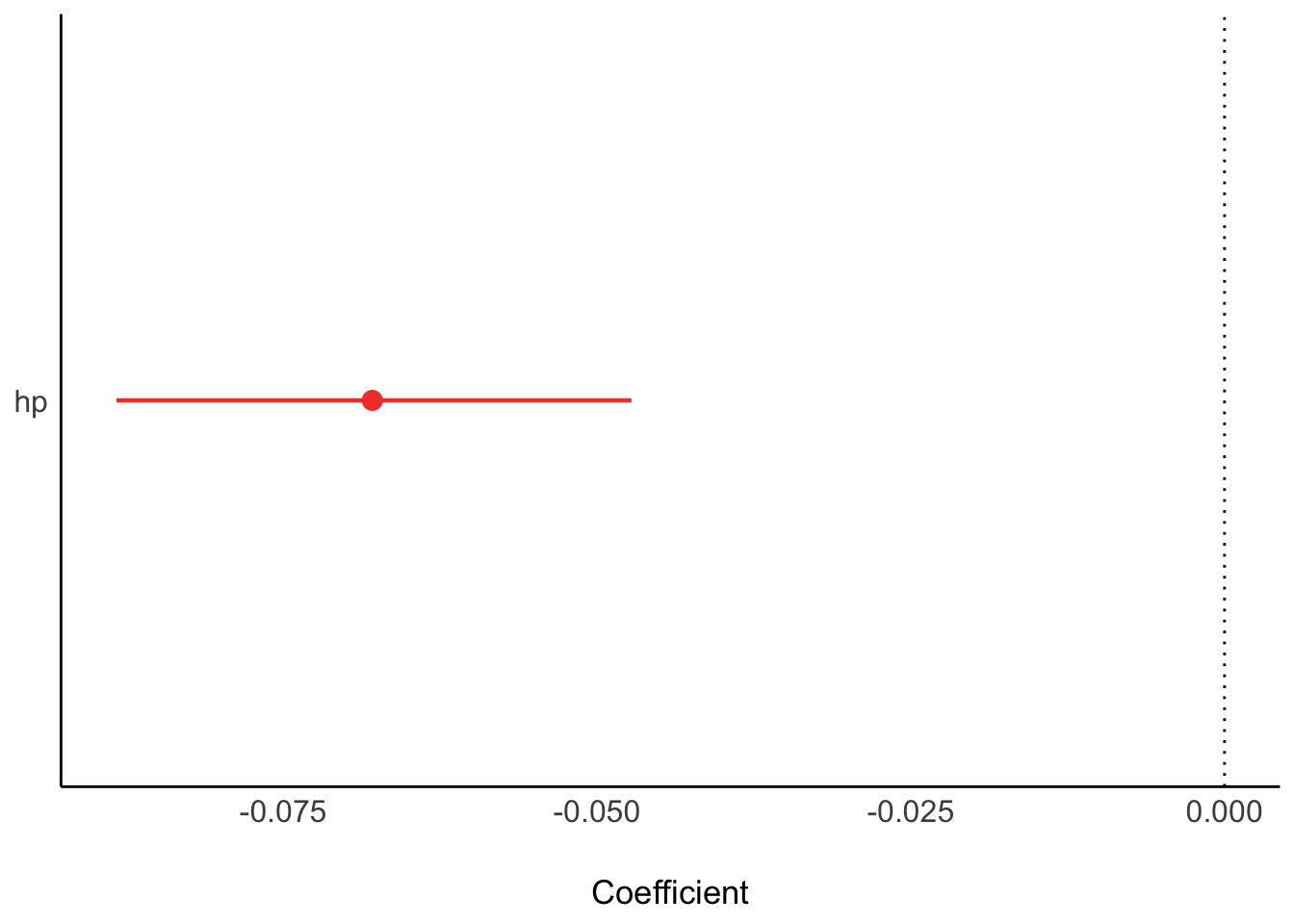

Plot the model parameters:

plot(parameters(lm1_freq))

4.5.2 Bayesian

lm1_bayes <- stan_glm(mpg ~ hp, data = mtcars)

SAMPLING FOR MODEL 'continuous' NOW (CHAIN 1).

Chain 1:

Chain 1: Gradient evaluation took 0.00513 seconds

Chain 1: 1000 transitions using 10 leapfrog steps per transition would take 51.3 seconds.

Chain 1: Adjust your expectations accordingly!

Chain 1:

Chain 1:

Chain 1: Iteration: 1 / 2000 [ 0%] (Warmup)

Chain 1: Iteration: 200 / 2000 [ 10%] (Warmup)

Chain 1: Iteration: 400 / 2000 [ 20%] (Warmup)

Chain 1: Iteration: 600 / 2000 [ 30%] (Warmup)

Chain 1: Iteration: 800 / 2000 [ 40%] (Warmup)

Chain 1: Iteration: 1000 / 2000 [ 50%] (Warmup)

Chain 1: Iteration: 1001 / 2000 [ 50%] (Sampling)

Chain 1: Iteration: 1200 / 2000 [ 60%] (Sampling)

Chain 1: Iteration: 1400 / 2000 [ 70%] (Sampling)

Chain 1: Iteration: 1600 / 2000 [ 80%] (Sampling)

Chain 1: Iteration: 1800 / 2000 [ 90%] (Sampling)

Chain 1: Iteration: 2000 / 2000 [100%] (Sampling)

Chain 1:

Chain 1: Elapsed Time: 0.056 seconds (Warm-up)

Chain 1: 0.053 seconds (Sampling)

Chain 1: 0.109 seconds (Total)

Chain 1:

SAMPLING FOR MODEL 'continuous' NOW (CHAIN 2).

Chain 2:

Chain 2: Gradient evaluation took 1.6e-05 seconds

Chain 2: 1000 transitions using 10 leapfrog steps per transition would take 0.16 seconds.

Chain 2: Adjust your expectations accordingly!

Chain 2:

Chain 2:

Chain 2: Iteration: 1 / 2000 [ 0%] (Warmup)

Chain 2: Iteration: 200 / 2000 [ 10%] (Warmup)

Chain 2: Iteration: 400 / 2000 [ 20%] (Warmup)

Chain 2: Iteration: 600 / 2000 [ 30%] (Warmup)

Chain 2: Iteration: 800 / 2000 [ 40%] (Warmup)

Chain 2: Iteration: 1000 / 2000 [ 50%] (Warmup)

Chain 2: Iteration: 1001 / 2000 [ 50%] (Sampling)

Chain 2: Iteration: 1200 / 2000 [ 60%] (Sampling)

Chain 2: Iteration: 1400 / 2000 [ 70%] (Sampling)

Chain 2: Iteration: 1600 / 2000 [ 80%] (Sampling)

Chain 2: Iteration: 1800 / 2000 [ 90%] (Sampling)

Chain 2: Iteration: 2000 / 2000 [100%] (Sampling)

Chain 2:

Chain 2: Elapsed Time: 0.049 seconds (Warm-up)

Chain 2: 0.047 seconds (Sampling)

Chain 2: 0.096 seconds (Total)

Chain 2:

SAMPLING FOR MODEL 'continuous' NOW (CHAIN 3).

Chain 3:

Chain 3: Gradient evaluation took 5.3e-05 seconds

Chain 3: 1000 transitions using 10 leapfrog steps per transition would take 0.53 seconds.

Chain 3: Adjust your expectations accordingly!

Chain 3:

Chain 3:

Chain 3: Iteration: 1 / 2000 [ 0%] (Warmup)

Chain 3: Iteration: 200 / 2000 [ 10%] (Warmup)

Chain 3: Iteration: 400 / 2000 [ 20%] (Warmup)

Chain 3: Iteration: 600 / 2000 [ 30%] (Warmup)

Chain 3: Iteration: 800 / 2000 [ 40%] (Warmup)

Chain 3: Iteration: 1000 / 2000 [ 50%] (Warmup)

Chain 3: Iteration: 1001 / 2000 [ 50%] (Sampling)

Chain 3: Iteration: 1200 / 2000 [ 60%] (Sampling)

Chain 3: Iteration: 1400 / 2000 [ 70%] (Sampling)

Chain 3: Iteration: 1600 / 2000 [ 80%] (Sampling)

Chain 3: Iteration: 1800 / 2000 [ 90%] (Sampling)

Chain 3: Iteration: 2000 / 2000 [100%] (Sampling)

Chain 3:

Chain 3: Elapsed Time: 0.052 seconds (Warm-up)

Chain 3: 0.044 seconds (Sampling)

Chain 3: 0.096 seconds (Total)

Chain 3:

SAMPLING FOR MODEL 'continuous' NOW (CHAIN 4).

Chain 4:

Chain 4: Gradient evaluation took 1.7e-05 seconds

Chain 4: 1000 transitions using 10 leapfrog steps per transition would take 0.17 seconds.

Chain 4: Adjust your expectations accordingly!

Chain 4:

Chain 4:

Chain 4: Iteration: 1 / 2000 [ 0%] (Warmup)

Chain 4: Iteration: 200 / 2000 [ 10%] (Warmup)

Chain 4: Iteration: 400 / 2000 [ 20%] (Warmup)

Chain 4: Iteration: 600 / 2000 [ 30%] (Warmup)

Chain 4: Iteration: 800 / 2000 [ 40%] (Warmup)

Chain 4: Iteration: 1000 / 2000 [ 50%] (Warmup)

Chain 4: Iteration: 1001 / 2000 [ 50%] (Sampling)

Chain 4: Iteration: 1200 / 2000 [ 60%] (Sampling)

Chain 4: Iteration: 1400 / 2000 [ 70%] (Sampling)

Chain 4: Iteration: 1600 / 2000 [ 80%] (Sampling)

Chain 4: Iteration: 1800 / 2000 [ 90%] (Sampling)

Chain 4: Iteration: 2000 / 2000 [100%] (Sampling)

Chain 4:

Chain 4: Elapsed Time: 0.049 seconds (Warm-up)

Chain 4: 0.048 seconds (Sampling)

Chain 4: 0.097 seconds (Total)

Chain 4: Actually, we want to suppress some overly verbose output of the sampling, so add the argument refresh = 0:

lm1_bayes <- stan_glm(mpg ~ hp, data = mtcars, refresh = 0)Get the parameter values:

parameters(lm1_bayes)| Parameter | Median | CI | CI_low | CI_high | pd | Rhat | ESS | Prior_Distribution | Prior_Location | Prior_Scale |

|---|---|---|---|---|---|---|---|---|---|---|

| (Intercept) | 30.0258671 | 0.95 | 26.6511691 | 33.4092485 | 1 | 0.9998508 | 3901.253 | normal | 20.09062 | 15.0673701 |

| hp | -0.0677732 | 0.95 | -0.0881377 | -0.0467074 | 1 | 1.0001298 | 3462.203 | normal | 0.00000 | 0.2197599 |

Plot the model parameters:

plot(parameters(lm1_bayes))

4.5.3 Model performance

r2(lm1_freq)# R2 for Linear Regression

R2: 0.602

adj. R2: 0.589r2(lm1_bayes)# Bayesian R2 with Compatibility Interval

Conditional R2: 0.587 (95% CI [0.376, 0.755])4.5.4 Model check

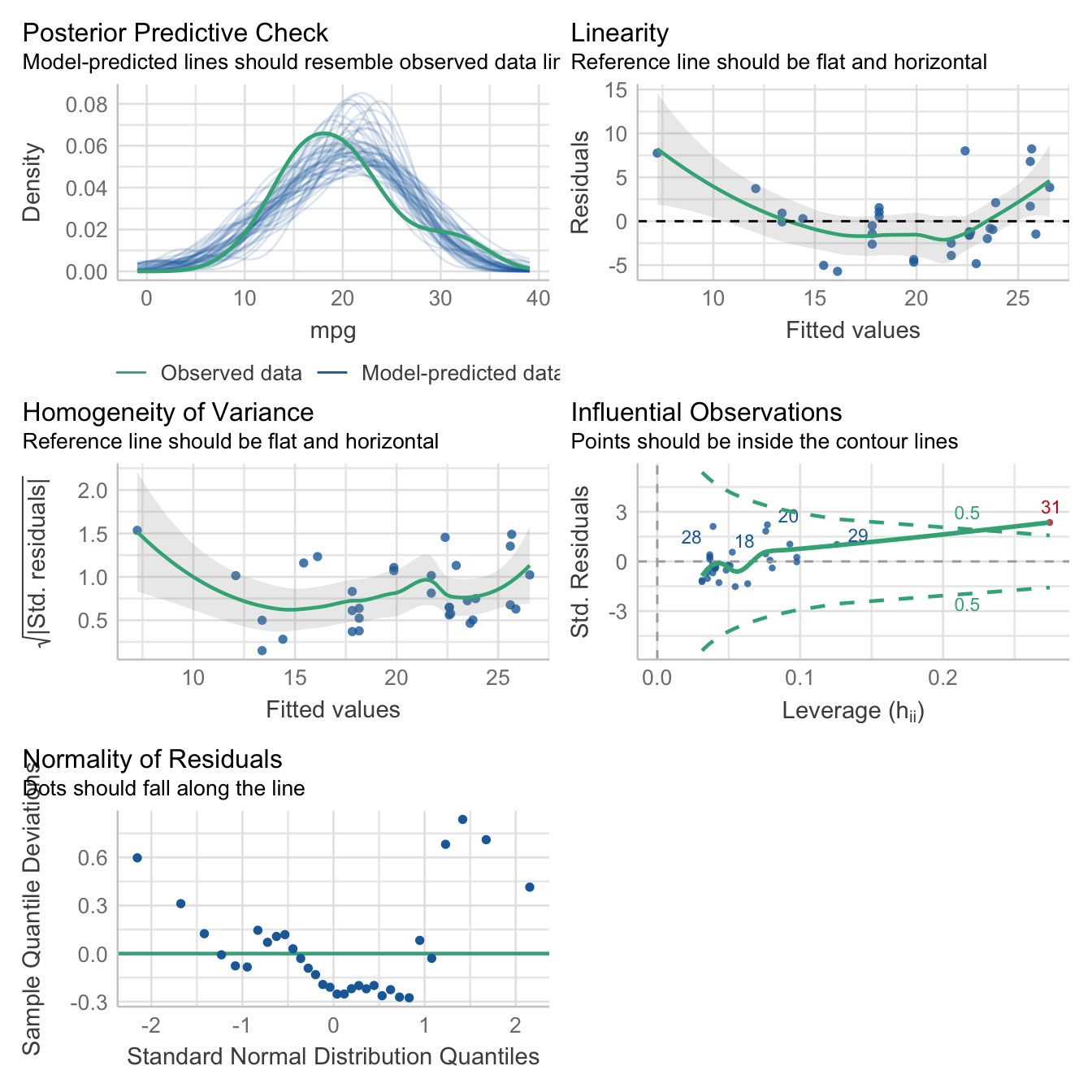

Here’s a bunch of typical model checks in the Frequentist sense.

check_model(lm1_freq)

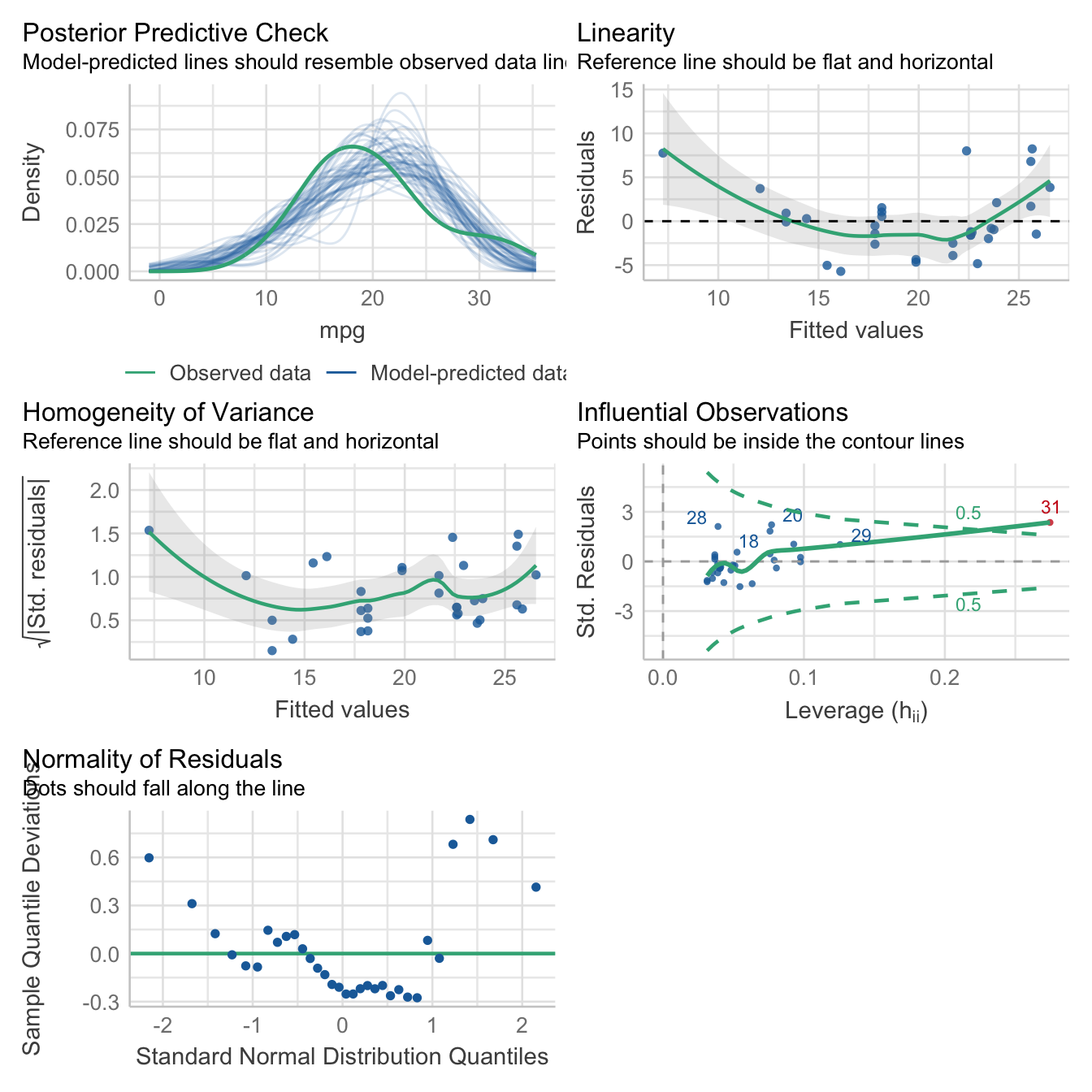

And here are some Bayesian flavored model checks.

check_model(lm1_bayes)

4.5.5 Get some predictions

lm1_pred <- estimate_relation(lm1_freq)

lm1_pred| hp | Predicted | SE | CI_low | CI_high |

|---|---|---|---|---|

| 52.00000 | 26.550990 | 1.1766139 | 24.148024 | 28.95396 |

| 83.44444 | 24.405590 | 0.9358933 | 22.494241 | 26.31694 |

| 114.88889 | 22.260189 | 0.7548971 | 20.718484 | 23.80190 |

| 146.33333 | 20.114789 | 0.6828911 | 18.720139 | 21.50944 |

| 177.77778 | 17.969389 | 0.7518697 | 16.433866 | 19.50491 |

| 209.22222 | 15.823989 | 0.9310065 | 13.922620 | 17.72536 |

| 240.66667 | 13.678588 | 1.1707841 | 11.287528 | 16.06965 |

| 272.11111 | 11.533188 | 1.4412478 | 8.589767 | 14.47661 |

| 303.55556 | 9.387788 | 1.7280486 | 5.858642 | 12.91693 |

| 335.00000 | 7.242387 | 2.0242544 | 3.108308 | 11.37647 |

More details on the above function can be found on the respective page at the easystats site.

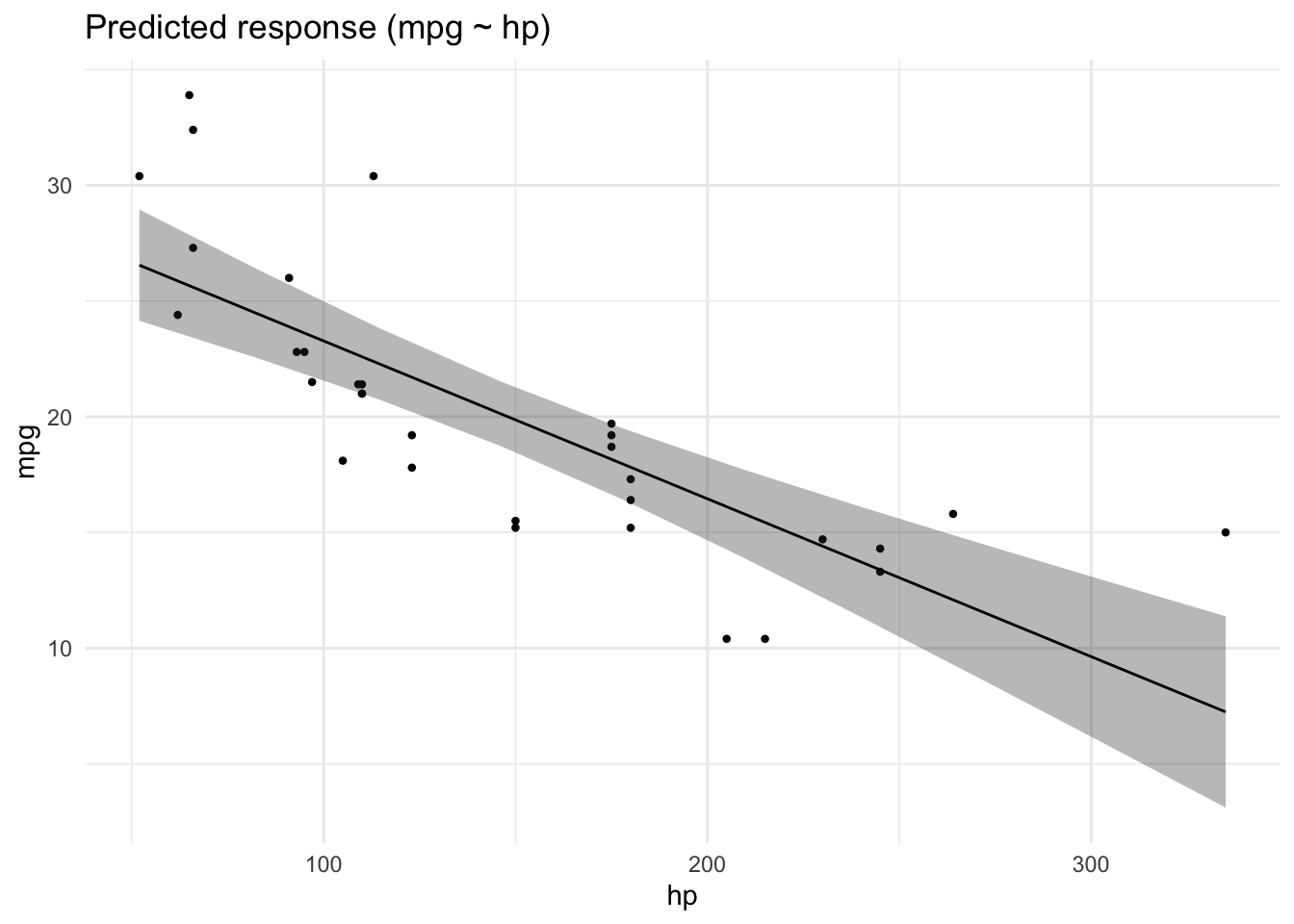

4.5.6 Plot the model

plot(lm1_pred)

4.5.7 More of this

More technical details for gauging model performance and model quality, can be found on the site of the R package “performance at the easystats site.

4.5.8 Exercises

4.5.9 Lab

Run a simple regression on your own research data. Present the results. Did you encounter any glitches?

4.6 Bayes-members only

Bayes statistics provide a distribution as the result of the analysis, the posterior distribution, which provides us with quite some luxury.

As the posterior distribution manifests itself by a number of samples, we can easily filter and manipulate this sample distribution in order to ask some interesing questions.

See

lm1_bayes_tibble <- as_tibble(lm1_bayes) # cast as a tibble (table)

head(lm1_bayes_tibble) # show the first few rows| (Intercept) | hp | sigma |

|---|---|---|

| 30.36084 | -0.0670639 | 3.870440 |

| 29.70206 | -0.0661331 | 4.035431 |

| 31.04928 | -0.0745350 | 3.940575 |

| 29.71194 | -0.0633953 | 3.836345 |

| 26.95708 | -0.0466278 | 3.907002 |

| 31.68332 | -0.0781663 | 3.660311 |

4.6.1 Asking for probabilites

What’s the probability that the effect of hp is negative?

lm1_bayes_tibble %>%

count(hp < 0)| hp < 0 | n |

|---|---|

| TRUE | 4000 |

Feel free to ask similar questions!

4.6.2 Asking for quantiles

With a given probability of, say 90%, how large is the effect of hp?

quantile(lm1_bayes_tibble$hp, .9) 90%

-0.05429705 What’s the smallest 95% percent interval for the effect of hp?

hdi(lm1_bayes)| Parameter | CI | CI_low | CI_high | Effects | Component |

|---|---|---|---|---|---|

| (Intercept) | 0.95 | 26.8196810 | 33.5375524 | fixed | conditional |

| hp | 0.95 | -0.0887995 | -0.0475132 | fixed | conditional |

In case you prefer 89% intervals (I do!):

hdi(lm1_bayes, ci = .89)| Parameter | CI | CI_low | CI_high | Effects | Component |

|---|---|---|---|---|---|

| (Intercept) | 0.89 | 27.336389 | 32.7313829 | fixed | conditional |

| hp | 0.89 | -0.085595 | -0.0526822 | fixed | conditional |

4.6.3 Model specification

In Bayes statistics, it is customary to specify the model in something like the following way:

In this specification,

4.6.4 Prediction interval

A prediction interval answers the following question

How large is the uncertainty in

associated with a given obersation? What interval of values should I expect for a randomly chosen observation?

For example, what’s the uncertainty attached to the fuel economy of a car with 100 hp?

estimate_prediction(model = lm1_bayes,

data = tibble(hp = 100) )| hp | Predicted | SE | CI_low | CI_high |

|---|---|---|---|---|

| 100 | 23.23795 | 4.096616 | 15.25316 | 31.36842 |

4.6.5 … And more

We could even ask intriguing questions such as

Given the model, and given two random observations, one from the experimental group and one from the control group, what is the probability that observation 1 has a higher value in

than observation 2 has?

Note that we are not only asking for “typical” observations as predicted by the model but we are also taking into account the uncertainty of the prediction for each group. Hence, this kind of questions is likely to yield more realistic (and less clear-cut) answers than just asking for the typical value. In other words, such analyses draw on the posterior predictive distribution.

4.7 More linear models

4.7.1 Multiple metric predictors

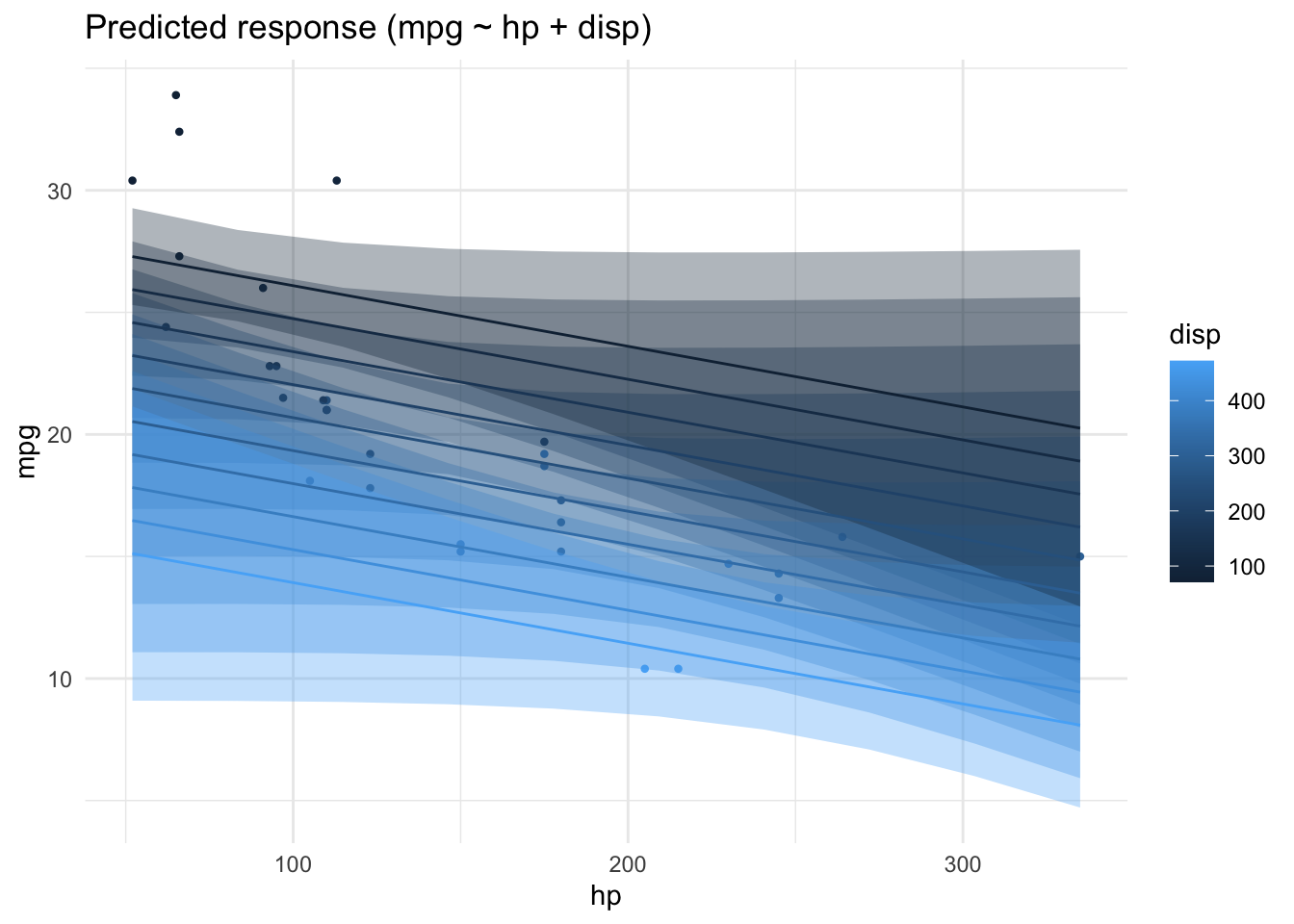

Assume we have a theory that dictates that fuel economy is a (causal) function of horse power and engine displacement.

lm2_freq <- lm(mpg ~ hp + disp, data = mtcars)

parameters(lm2_freq)| Parameter | Coefficient | SE | CI | CI_low | CI_high | t | df_error | p |

|---|---|---|---|---|---|---|---|---|

| (Intercept) | 30.7359042 | 1.3315661 | 0.95 | 28.0125457 | 33.4592628 | 23.082522 | 29 | 0.0000000 |

| hp | -0.0248401 | 0.0133855 | 0.95 | -0.0522165 | 0.0025363 | -1.855746 | 29 | 0.0736791 |

| disp | -0.0303463 | 0.0074049 | 0.95 | -0.0454909 | -0.0152016 | -4.098159 | 29 | 0.0003063 |

Similarly for Bayes inference:

lm2_bayes <- stan_glm(mpg ~ hp + disp, data = mtcars)Results

parameters(lm2_bayes)| Parameter | Median | CI | CI_low | CI_high | pd | Rhat | ESS | Prior_Distribution | Prior_Location | Prior_Scale |

|---|---|---|---|---|---|---|---|---|---|---|

| (Intercept) | 30.7401922 | 0.95 | 27.8998921 | 33.3325232 | 1.00000 | 0.9993953 | 4172.592 | normal | 20.09062 | 15.0673701 |

| hp | -0.0246551 | 0.95 | -0.0512410 | 0.0029018 | 0.95975 | 0.9997783 | 2066.124 | normal | 0.00000 | 0.2197599 |

| disp | -0.0304618 | 0.95 | -0.0456687 | -0.0155818 | 1.00000 | 1.0005632 | 2063.119 | normal | 0.00000 | 0.1215712 |

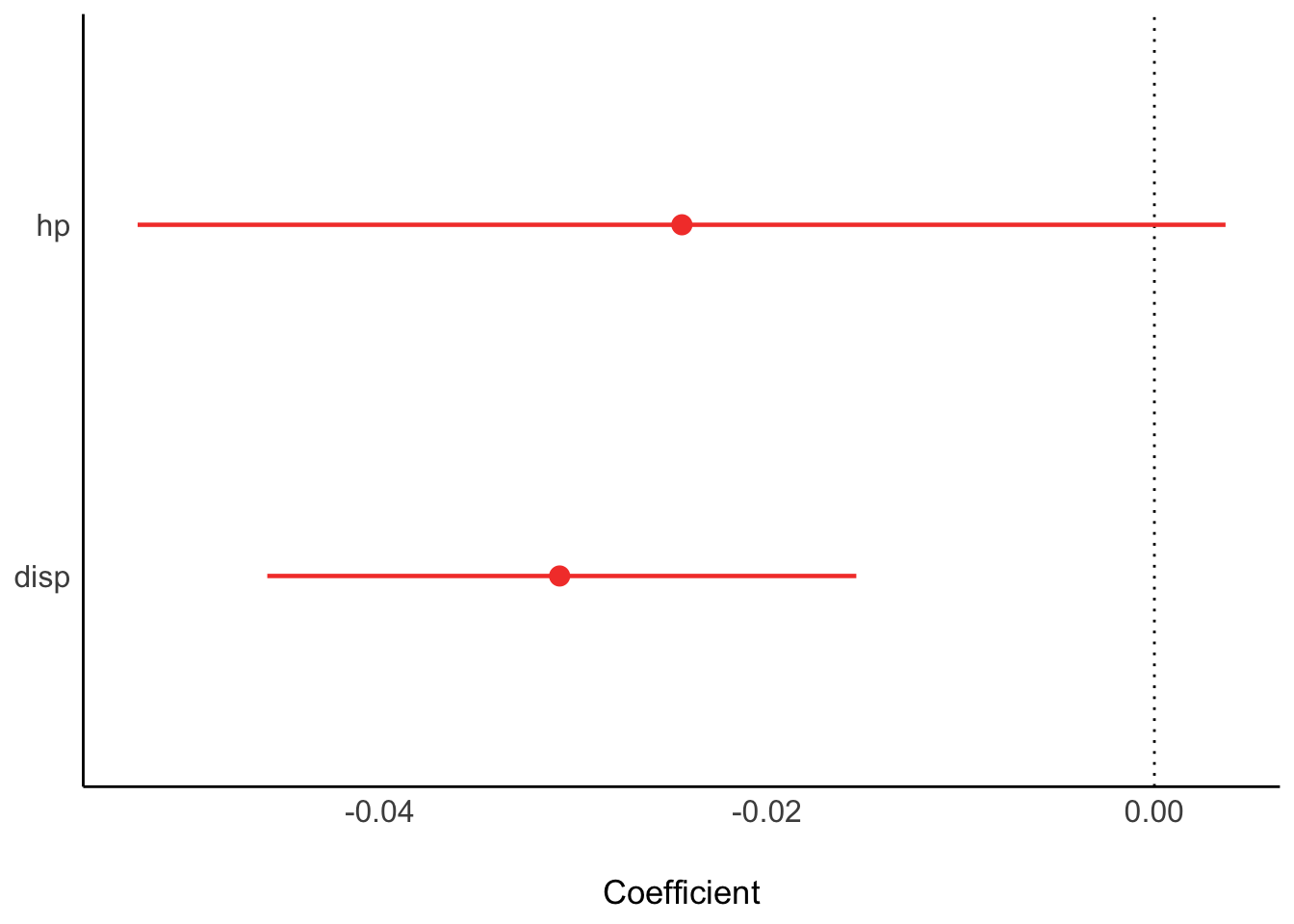

plot(parameters(lm2_bayes))

r2(lm2_bayes)# Bayesian R2 with Compatibility Interval

Conditional R2: 0.730 (95% CI [0.576, 0.845])Depending on the value of disp the prediction of mpg from hp will vary:

lm2_pred <- estimate_relation(lm2_freq)

plot(lm2_pred)

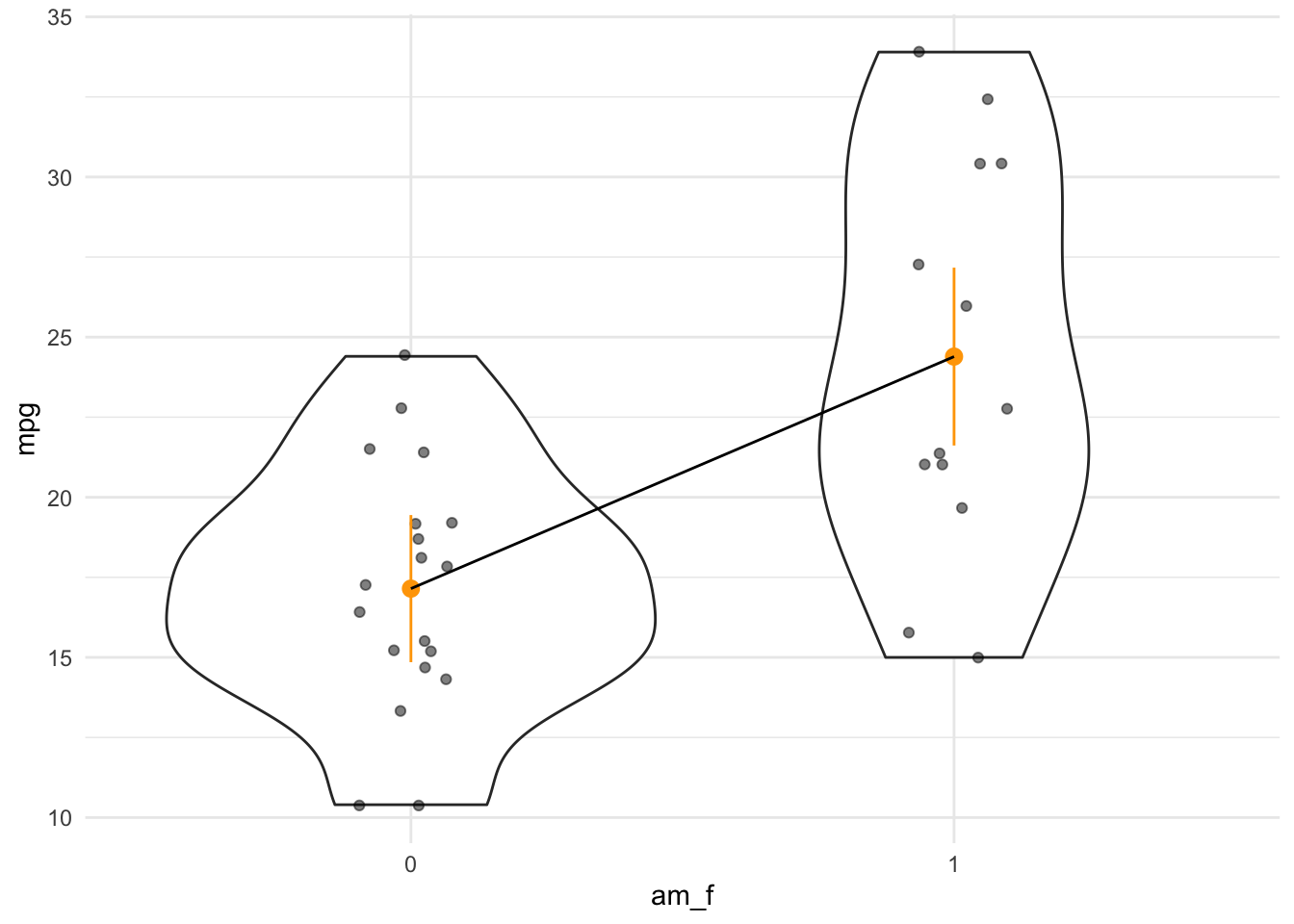

4.7.2 One nominal predictor

lm3a <- lm(mpg ~ am, data = mtcars)

parameters(lm3a)| Parameter | Coefficient | SE | CI | CI_low | CI_high | t | df_error | p |

|---|---|---|---|---|---|---|---|---|

| (Intercept) | 17.147368 | 1.124602 | 0.95 | 14.85062 | 19.44411 | 15.247492 | 30 | 0.000000 |

| am | 7.244939 | 1.764422 | 0.95 | 3.64151 | 10.84837 | 4.106127 | 30 | 0.000285 |

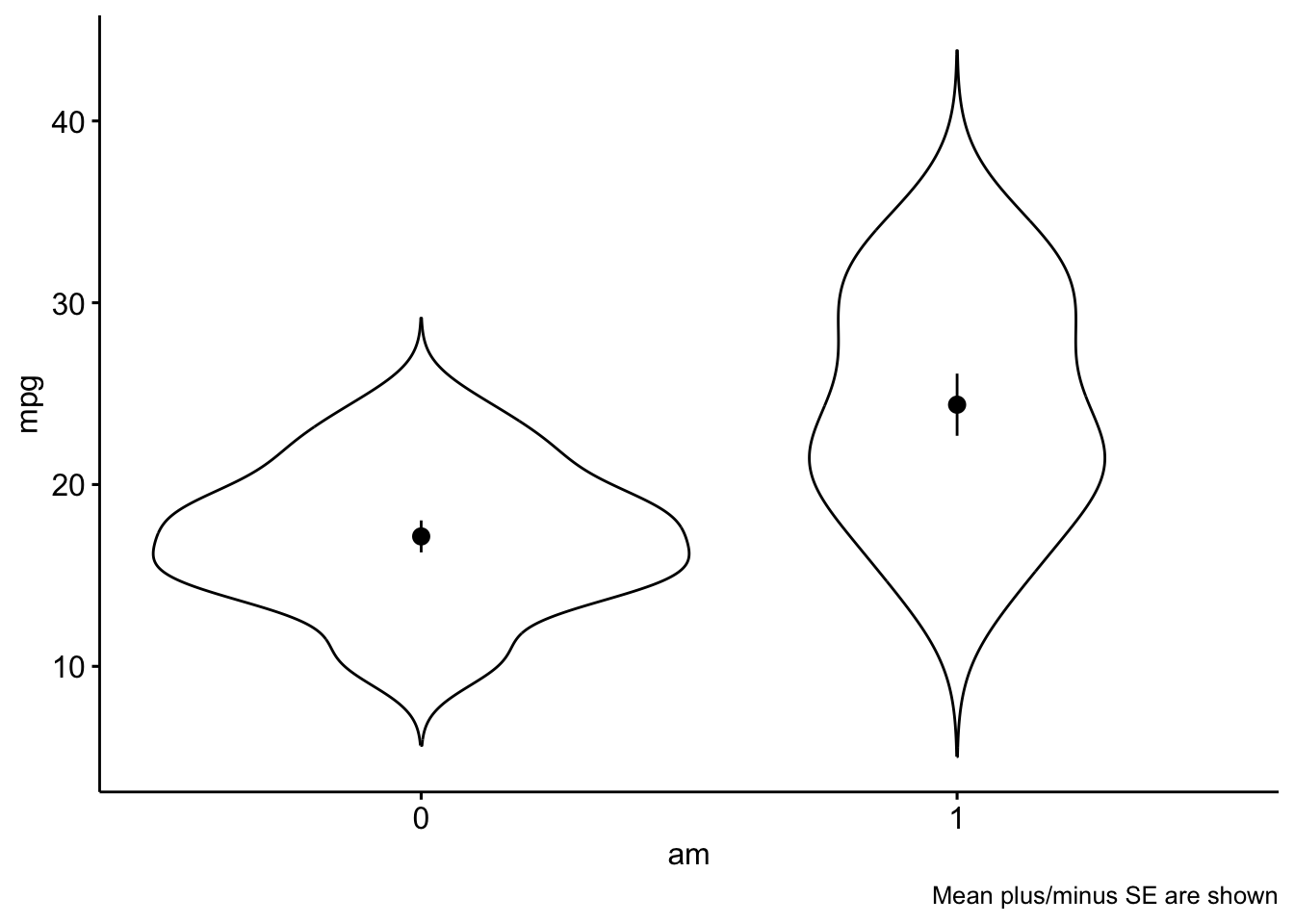

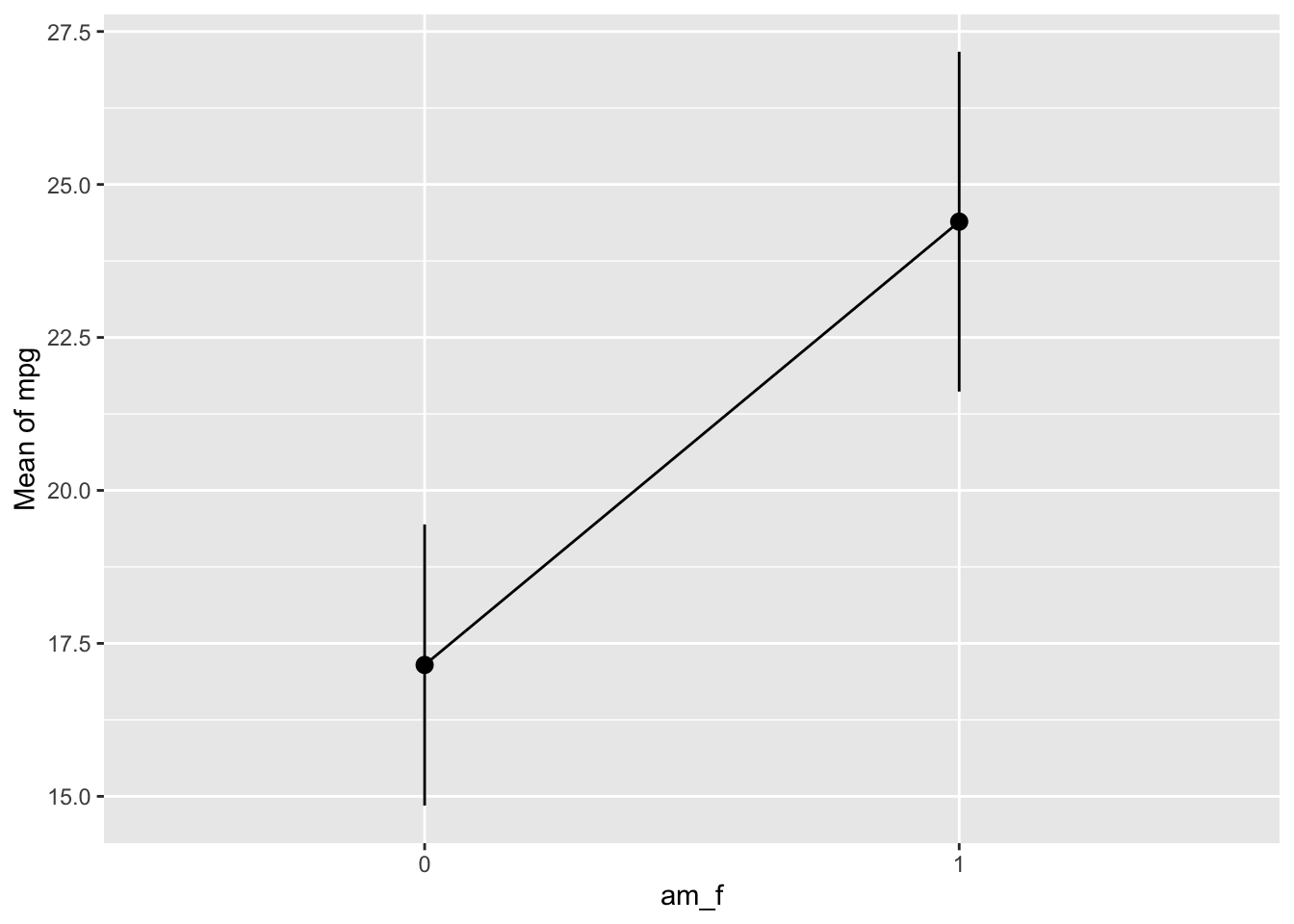

A linear model with one predictor having two values amounts to nothing more than comparing the means of two groups:

ggviolin(mtcars, x = "am", y = "mpg",

add = "mean_se") +

labs(caption = "Mean plus/minus SE are shown")

Let’s compute the mean values per group:

mtcars |>

group_by(am) |>

describe_distribution(select = "mpg")| Variable | Mean | SD | IQR | Min | Max | Skewness | Kurtosis | n | n_Missing | .group |

|---|---|---|---|---|---|---|---|---|---|---|

| mpg | 17.14737 | 3.833966 | 4.50 | 10.4 | 24.4 | 0.0164578 | -0.3339353 | 19 | 0 | am=0 |

| mpg | 24.39231 | 6.166504 | 10.05 | 15.0 | 33.9 | 0.0672942 | -1.1586082 | 13 | 0 | am=1 |

However, unfortunately, the plot of the model needs a nominal variable if we are to compare groups. In our case, am is considered a numeric variables, since it consists of numbers only. The plot does not work as expected, malheureusement:

plot(estimate_relation(lm3a))

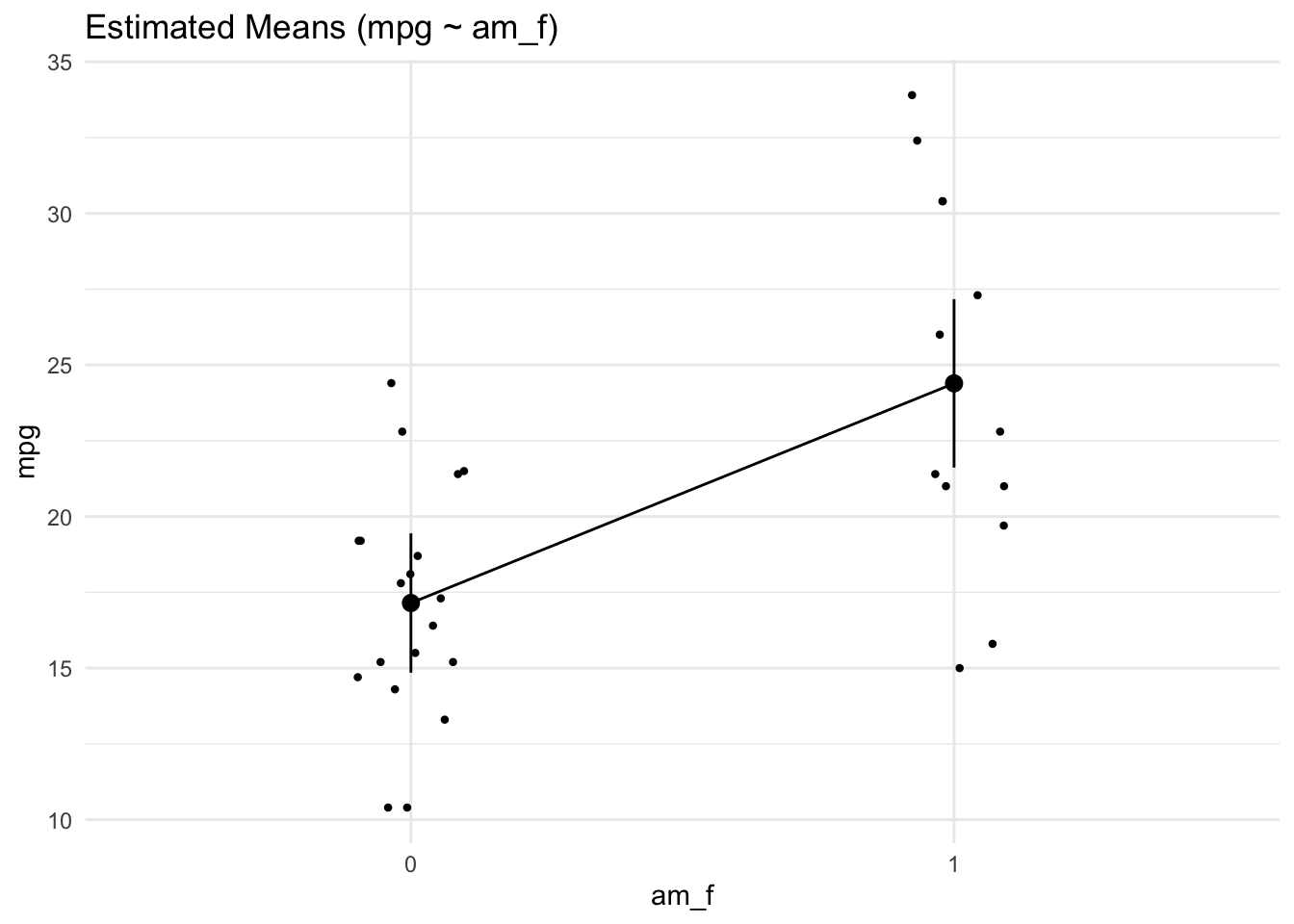

plot(lm3a_means)Error: object 'lm3a_means' not foundWe need to transform am to a factor variable. That’s something like a string (text) variable. If we hand over a factor() to the plotting function, everything will run smoothly. Computationwise, no big differences:

mtcars2 <-

mtcars %>%

mutate(am_f = factor(am))

lm3a <- lm(mpg ~ am_f, data = mtcars2)

parameters(lm3a)| Parameter | Coefficient | SE | CI | CI_low | CI_high | t | df_error | p |

|---|---|---|---|---|---|---|---|---|

| (Intercept) | 17.147368 | 1.124602 | 0.95 | 14.85062 | 19.44411 | 15.247492 | 30 | 0.000000 |

| am_f1 | 7.244939 | 1.764422 | 0.95 | 3.64151 | 10.84837 | 4.106127 | 30 | 0.000285 |

We can also compute the predicted output values of our model at given input variable values:

lm3a_means <- estimate_means(lm3a, at = "am = c(0, 1)")

lm3a_means | am_f | Mean | SE | CI_low | CI_high | t | df |

|---|---|---|---|---|---|---|

| 0 | 17.14737 | 1.124602 | 14.85062 | 19.44411 | 15.24749 | 30 |

| 1 | 24.39231 | 1.359578 | 21.61568 | 27.16894 | 17.94108 | 30 |

If we were not to specify the values of am which we would like to get predictions for, the default of the function would select 10 values, spread across the range of am. For numeric variables, this is usually fine. However, for nominal variables - and am is in fact a nominally scaled variable - we insist that we want predictions for the levels of the variable only, that is for 0 and 1.

lm3a_means <- estimate_means(lm3a)

plot(lm3a_means)

Note that we should have converted am to a factor variable before fitting the model. Otherwise, the plot won’t work.

Here’s a more hand-crafted version of the last plot, see Fig. Figure 4.6.

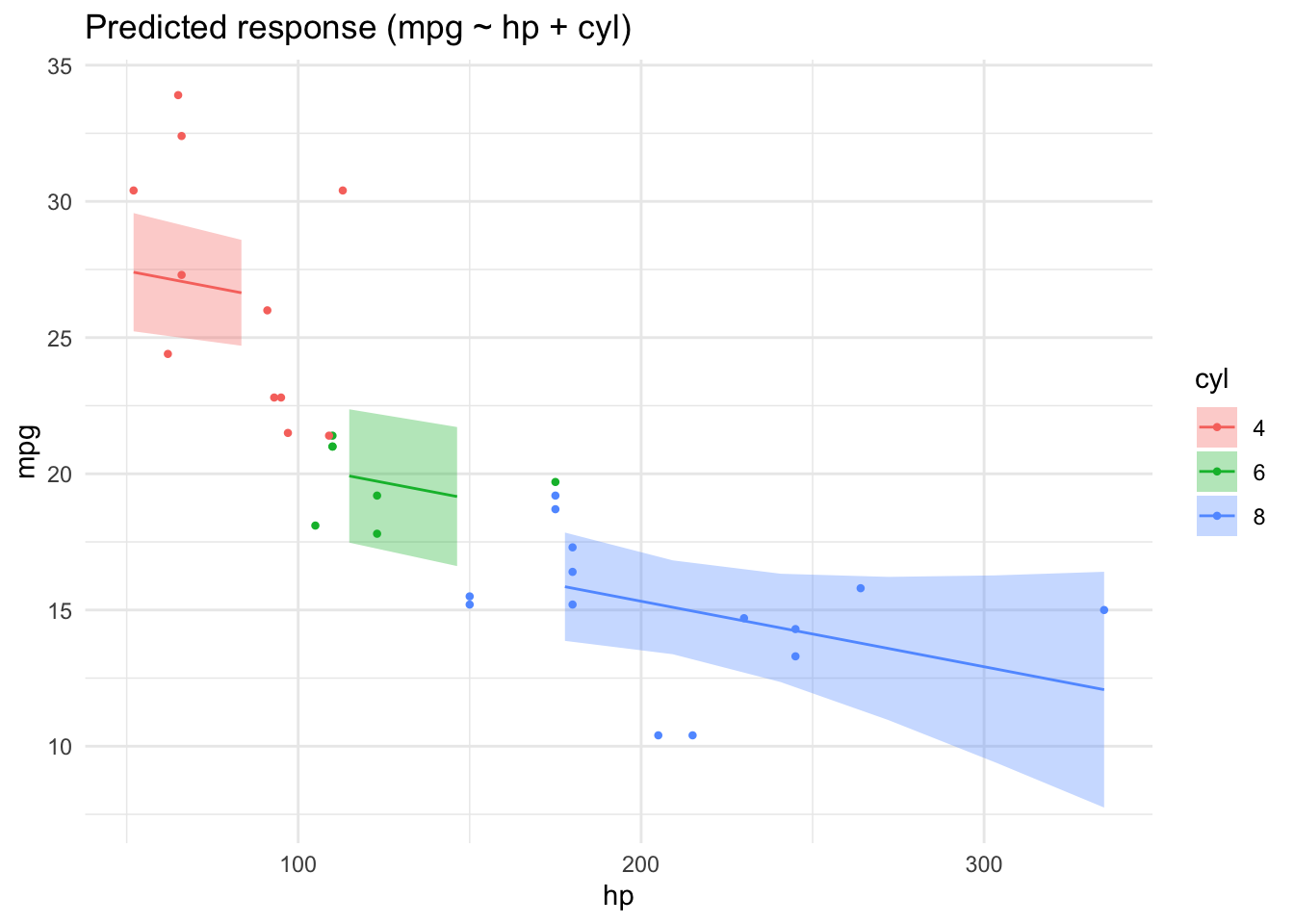

4.7.3 One metric and one nominal predictor

mtcars2 <-

mtcars %>%

mutate(cyl = factor(cyl))

lm4 <- lm(mpg ~ hp + cyl, data = mtcars2)

parameters(lm4)| Parameter | Coefficient | SE | CI | CI_low | CI_high | t | df_error | p |

|---|---|---|---|---|---|---|---|---|

| (Intercept) | 28.6501182 | 1.5877870 | 0.95 | 25.3976840 | 31.9025524 | 18.044056 | 28 | 0.0000000 |

| hp | -0.0240388 | 0.0154079 | 0.95 | -0.0556005 | 0.0075228 | -1.560163 | 28 | 0.1299540 |

| cyl6 | -5.9676551 | 1.6392776 | 0.95 | -9.3255631 | -2.6097471 | -3.640418 | 28 | 0.0010921 |

| cyl8 | -8.5208508 | 2.3260749 | 0.95 | -13.2855993 | -3.7561022 | -3.663188 | 28 | 0.0010286 |

lm4_pred <- estimate_relation(lm4)

plot(lm4_pred)

4.7.4 Watch out for Simpson

Beware! Model estimates can swing wildly if you add (or remove) some predictor from your model. See this post for an demonstration.

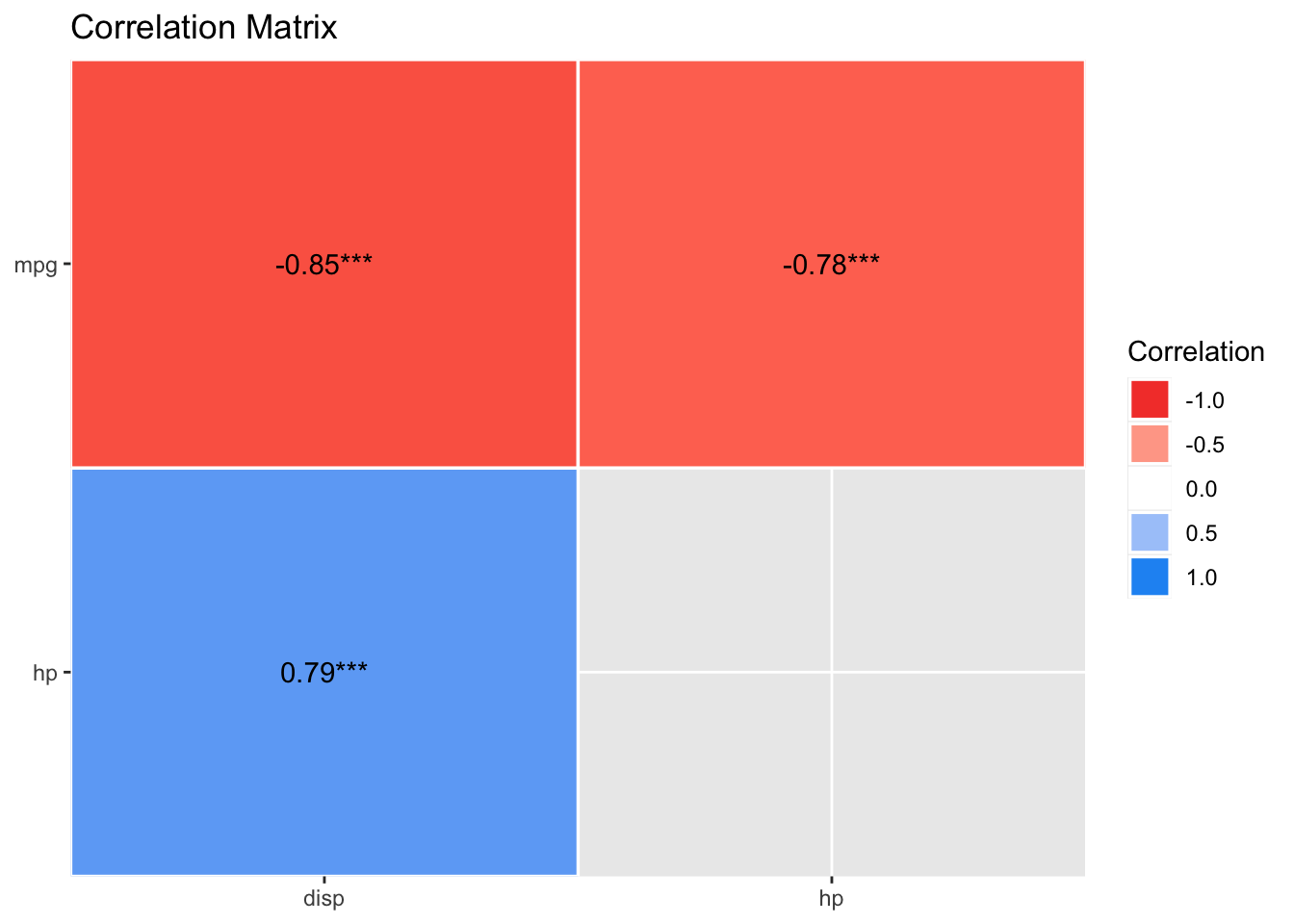

4.8 What about correlation?

Correlation is really a close cousin to regression. In fact, regression with standardized variables amounts to correlation.

Let’s get the correlation matrix of the variables in involved in lm4.

lm4_corr <-

mtcars %>%

select(mpg, hp, disp) %>%

correlation()

lm4_corr| Parameter1 | Parameter2 | r | CI | CI_low | CI_high | t | df_error | p | Method | n_Obs |

|---|---|---|---|---|---|---|---|---|---|---|

| mpg | hp | -0.7761684 | 0.95 | -0.8852686 | -0.5860994 | -6.742388 | 30 | 2e-07 | Pearson correlation | 32 |

| mpg | disp | -0.8475514 | 0.95 | -0.9233594 | -0.7081376 | -8.747151 | 30 | 0e+00 | Pearson correlation | 32 |

| hp | disp | 0.7909486 | 0.95 | 0.6106794 | 0.8932775 | 7.080122 | 30 | 1e-07 | Pearson correlation | 32 |

plot(summary(lm4_corr))

4.9 Exercises

🧑🎓 I want more!

👨🏫 Checkout all exercises tagged with “regression” on datenwerk. Pro-Tipp: Use the translation function of your browers to translate the webpage into your favorite language.

4.10 Case study

- Prices of Boston houses, first part

- Modeling movie succes, first part

4.11 Lab

Get your own data, and build a simple model reflecting your research hypothesis. If you are lacking data (or hypothesis) get something close to it.

4.12 Going further

Check-out this chapter of my intro stats book to get an overview on statistical modeling using basic regression technieques. Please use your browser’s translation feature to render the webpages into your favorite language.

An accessible treatment of regression is provided by Ismay & Kim (2020).

Roback & Legler (2021) provide a more than introductory account of regression while being accessible. A recent but already classic book (if this is possible) is the book by Gelman et al. (2021). You may also benefit from Poldrack (2022) (open access).

For a gentle introduction to the basics of modelling, see ModernDive Chap. 5.0 (Ismay & Kim, 2020), and get the R code here. For slightly more advanced topics on linear regression such as multiple regression and interaction, see ModernDive Chap. 6, and get the R code here.